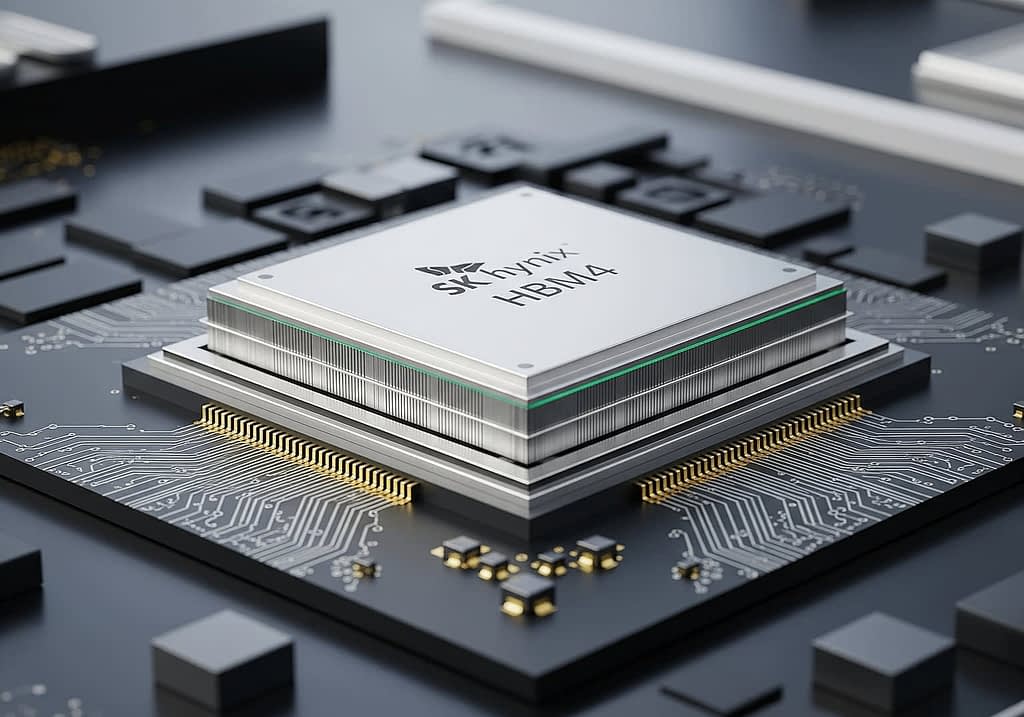

South Korean tech giant SK hynix has completed development of revolutionary HBM4 memory chips and prepared for mass production. The new generation achieves double the bandwidth compared to its predecessor and can boost AI service performance by up to 69 percent while simultaneously reducing energy consumption by more than 40 percent.

HBM4 Breaks Barriers: Double Performance for AI

Imagine ChatGPT responding twice as fast, or a data center consuming 40 percent less energy while maintaining the same performance. This isn’t science fiction, but the reality that SK hynix’s HBM4 brings. While conventional DRAM chips are like individual books on a shelf, HBM functions like a skyscraper full of libraries – vertically stacked chips connected by ultra-fast elevators.

The key breakthrough is the expansion to 2048 I/O terminals, which in practice means double the width of the data “highway” compared to HBM3E. For AI applications, this means an end to bottlenecks that currently slow down even the most expensive accelerators.

Specific advantages for various sectors:

- AI model training: Up to 69 percent faster data processing

- Data centers: Over 40 percent energy savings

- Graphics computing: Smoother rendering of complex scenes

- Scientific simulations: Faster processing of massive datasets

Speed That Exceeds Standards: 10 Gb/s Versus Expectations

While the JEDEC organization cautiously standardized HBM4 at 8 Gb/s per pin, SK hynix went further and exceeded the 10 Gb/s barrier. It’s like Formula 1 setting a speed limit of 300 km/h, but one team managing to safely drive at 375 km/h.
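The arithmetic behind these figures can be sketched in a few lines. This is a back-of-the-envelope calculation of theoretical peak bandwidth per stack, using only the numbers quoted in this article (2048 I/O terminals, 8 Gb/s and 10 Gb/s per pin). The function and constant names are illustrative assumptions, and real-world throughput will be lower because of protocol overhead.

```python
def stack_bandwidth_gbytes(io_pins: int, gbit_per_pin: float) -> float:
    """Theoretical peak bandwidth of one HBM stack in GB/s.

    Each pin carries gbit_per_pin gigabits per second; dividing by 8
    converts bits to bytes.
    """
    return io_pins * gbit_per_pin / 8


# Figures quoted in the article; the names are hypothetical.
HBM4_IO_PINS = 2048        # I/O terminals per HBM4 stack
JEDEC_PIN_RATE = 8.0       # Gb/s per pin, the JEDEC figure
SK_HYNIX_PIN_RATE = 10.0   # Gb/s per pin, lower bound of the SK hynix claim

jedec_bw = stack_bandwidth_gbytes(HBM4_IO_PINS, JEDEC_PIN_RATE)
sk_hynix_bw = stack_bandwidth_gbytes(HBM4_IO_PINS, SK_HYNIX_PIN_RATE)
headroom = sk_hynix_bw / jedec_bw - 1  # relative gain over the standard

print(f"JEDEC baseline: {jedec_bw:.0f} GB/s per stack")
print(f"SK hynix HBM4:  {sk_hynix_bw:.0f} GB/s per stack")
print(f"Headroom:       {headroom:.0%}")
```

Under these assumptions, the standard works out to roughly 2 TB/s per stack and the SK hynix figure to about 2.5 TB/s, the same 25 percent margin as in the racing analogy.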

This result was enabled by combining a fifth-generation 10nm-class process (1b nm) with the MR-MUF packaging process. Simply put, liquid protective material is injected into the gaps between the stacked chip layers, where it hardens and both insulates and protects them. The result? More stable operation, better heat dissipation, and most importantly, the possibility of mass production without compromises.

SK hynix already began shipping samples of its twelve-layer HBM4 chips to key customers in March 2025. Now it has a complete production line ready – which means a crucial advantage in the competitive battle.

Battle of Three Giants: Who Will Win the Race to the Future

A fascinating drama between three main players is unfolding in the HBM market. SK hynix currently holds the royal throne with a 66 percent market share, but Samsung and Micron aren’t sleeping. Analysts at Mizuho even warn of a two-horse race scenario, similar to HBM3E, where SK hynix and Micron left Samsung behind.

Samsung is betting on even more advanced 1c-nm technology, but is currently dealing with delays. It promises to deliver samples next year – in the tech world, a year can be an eternity. Micron is also already shipping HBM4 samples, planning mass production around 2026. For investors, this means SK hynix has a window of opportunity it can use to strengthen its dominance.

Competitive strategies of individual players:

- SK hynix: First to market with mass production, “first mover” advantage

- Samsung: Betting on more advanced technology, but with delay risks

- Micron: More conservative approach, but stable deliveries from 2026

Nvidia as the Golden Vein: Demand That Never Sleeps

The biggest driver for the HBM market remains Nvidia, which continuously increases memory capacity requirements for its accelerators. Each new GPU generation needs more HBM than the previous one, a trend that shows no signs of slowing down.

HBM4 is a premium product with significantly higher prices than standard DRAM. For SK hynix, this opens a path to better profitability while maintaining volumes. While regular DRAM chips sell for a few dollars, HBM4 chips reach hundreds of dollars each.

Stock Success Reflects Long-term Potential

Investors reacted enthusiastically to the HBM4 announcement. SK hynix shares (HY9H.F) climbed to historic highs during September 2025, reflecting confidence in the company’s technological leadership. The market values not only the technical advantage, but primarily the certainty of future revenues from key customers like Nvidia.

The company finds itself in a key position during the AI revolution thanks to HBM4 technology. It combines technological superiority with readiness for mass production precisely at a time when demand for high-performance memory is exploding.

Risks certainly exist. Production complexity can lead to lower yields, which would temporarily limit supply. Intensifying competition and possible price pressure from customers like Nvidia may gradually compress margins. Nevertheless, positive factors prevail: technological advantage, readiness for mass production, and growing global demand for AI infrastructure.

SK hynix (HY9H.F) is thus one of the key players in the memory segment for AI infrastructure. HBM4 is not just a technological breakthrough, but also a strong fundamental story that could influence the company’s market position in the coming years.